Biography

Hey! I am a PhD student at the Centre for Cognitive Science at the Technical University of Darmstadt. After gaining technical experience in Information Systems Engineering and Computer Science, I decided to put these skills into action in the domain of Cognitive Science. My research interests include computational (Bayesian) modeling, inverse decision-making in human behavior, and (the interplay of) perception & action. I am always eager to learn about new methods from Machine Learning and how they can improve our understanding of human behavior or, conversely, be improved by our knowledge of human cognition.

My Master’s thesis on the inference of cost functions in continuous decision-making was supported by the research cluster “The Adaptive Mind”, funded by the Hessian Ministry of Higher Education, Research, Science and the Arts.

Besides my academic interests, I am a team leader at Engineers Without Borders Darmstadt and have long been committed to sustainability.

- Computational Modeling

- Inverse Decision-Making

- Perception and Action

- Machine Learning

PhD in Cognitive Science, Since 2024

Centre for Cognitive Science, Technical University of Darmstadt

M.Sc. in Autonomous Systems, 2024

Technical University of Darmstadt

B.Sc. in Cognitive Science, 2022

Technical University of Darmstadt

B.Sc. in Information Systems Engineering, 2020

Technical University of Darmstadt

Skills

Projects

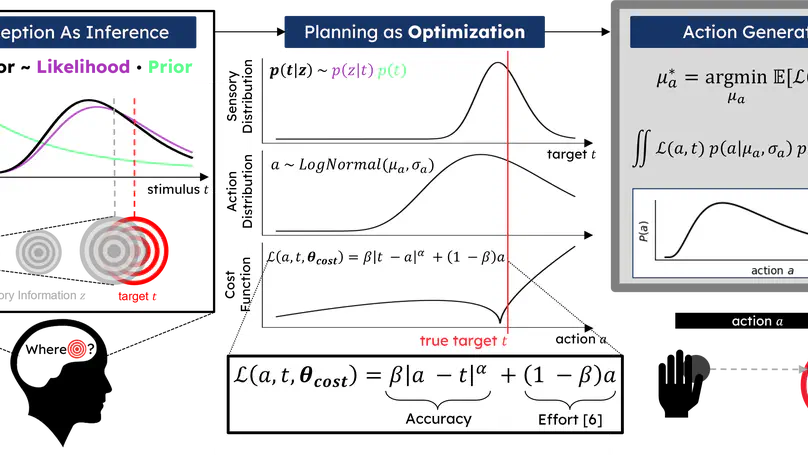

Bayesian observer and actor models have provided normative explanations for behavior in many perception & action tasks including discrimination tasks, cue combination, and sensorimotor control by attributing behavioral variability and biases to factors such as perceptual and motor uncertainty, prior beliefs, and behavioral costs.

However, it is unclear how to extend these models to more complex tasks such as continuous production and reproduction tasks, because inferring behavioral parameters is often difficult due to analytical intractability. Here, we overcome this limitation by approximating Bayesian actor models using neural networks. Because Bayesian actor models are analytically tractable only for a very limited set of probability distributions, e.g. Gaussians, and cost functions, e.g. quadratic, one typically uses numerical methods. This makes inference of their parameters computationally difficult. To address this, we approximate the optimal actor using a neural network trained on a wide range of different parameter settings. The pre-trained neural network is then used to efficiently perform sampling-based inference of the Bayesian actor model’s parameters with performance gains of up to three orders of magnitude compared to numerical solution methods. We validated our proposed method on synthetic data, showing that recovery of sensorimotor parameters is feasible. Importantly, individual behavioral differences can be attributed to differences in perceptual uncertainty, motor variability, and internal costs.

Finally, we analyzed real data from a task in which participants had to throw beanbags towards targets at different distances and from a task in which subjects needed to propel a puck to different target distances. Behaviorally, subjects differed in how strongly they undershot and overshot different targets and whether they showed a regression to the mean over trials. We could attribute these complex behavioral patterns to changes in priors due to learning, and undershoots and overshoots to behavioral costs and motor variability. Taken together, we present a new analysis method applicable to continuous production and reproduction tasks, which remains computationally feasible even for complex cost functions and probability distributions.

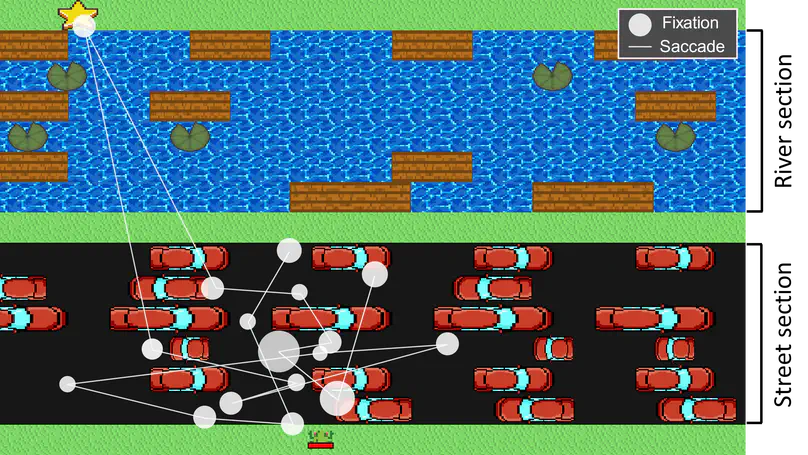

Humans can employ sophisticated strategies to plan their next actions despite having only limited cognitive capacity. Most studies investigating human behavior focus on minimal and rather abstract tasks. We provide an environment inspired by the video game Frogger, designed specifically for studying how far humans plan ahead. This is described by the planning horizon, a quantity that cannot be measured directly and that represents how far ahead people can consider the consequences of their actions. We treat the subjects’ eye movements as an externalization of their internal planning horizon and can thereby infer its development over time.

We found that people can dynamically adapt their planning horizon when switching between tasks. Even when subjects employed larger planning horizons, we could not measure any physiological indicators of stronger cognitive engagement. Subjects using larger planning horizons were able to score higher in the game. We designed neural networks for predicting a subject’s planning horizon in different situations, providing further insight into the key features needed for accurate predictions of the planning horizon. In general, models trained only on subject-specific data achieved higher accuracy than models trained on data collected from all subjects.

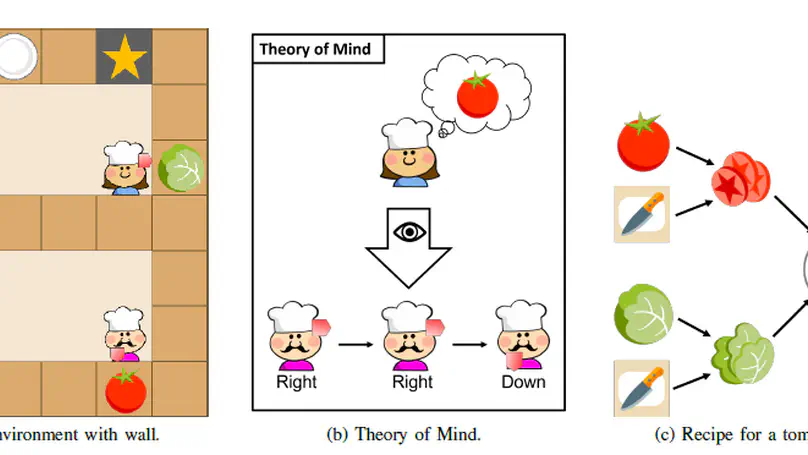

Humans cooperate with each other on an everyday basis using some form of Theory of Mind (ToM). This term describes a human’s ability to infer the mental state of another person, e.g. the person’s beliefs or goals, which in turn enables more efficient interaction and cooperation. Robots do not inherently have a similar concept, so implementing a ToM for robotic agents could potentially enable much more natural Human-Robot Interaction. In this paper, we explore existing ToM approaches to infer the mental states of a human interaction partner. In addition, two different approaches are implemented to estimate the goal of another agent given the past environment states. The estimated goal is then used as input for a Reinforcement Learning module that learns our agent’s optimal behavior. The proposed methods are evaluated in the Overcooked environment. We show that we can successfully infer the goal of the other agent and use this goal to learn a policy. Yet at the same time, our results show that ToM models are not always as useful or necessary as they seem. The environmental conditions and evaluation metrics cannot be chosen as straightforwardly as one might think and require more thought and research.

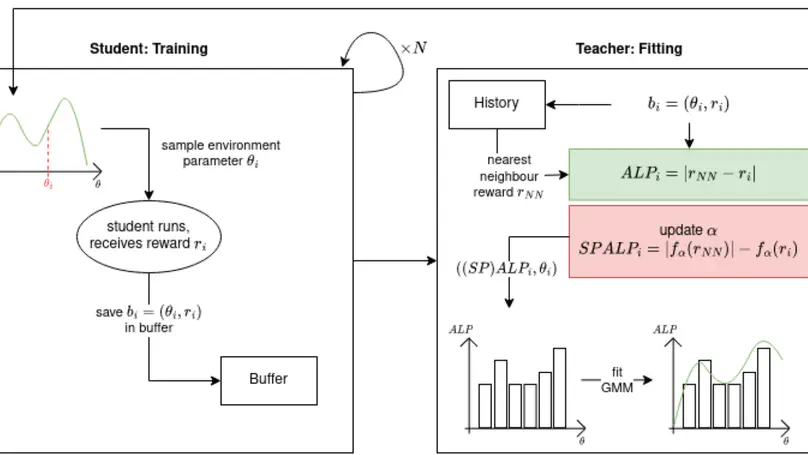

The usability of Reinforcement Learning is restricted by the large computation times it requires. Curriculum Reinforcement Learning speeds up learning by defining a helpful order in which an agent encounters tasks, i.e. from simple to hard. Curricula based on Absolute Learning Progress (ALP) have proven successful in different environments, but waste computation on repeating already learned behavior in new tasks. We solve this problem by introducing a new regularization method based on Self-Paced (Deep) Learning, called Self-Paced Absolute Learning Progress (SPALP). We evaluate our method in three different environments. Our method achieves performance comparable to the original ALP in all cases, and reaches it faster than ALP in two of them. We illustrate possibilities to further improve the efficiency and performance of SPALP.
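The idea behind an ALP-based curriculum can be sketched as follows. This is a deliberately simplified illustration over a small discrete task set, not the SPALP method and not the original ALP implementation: learning progress for a task is approximated as the absolute change between its two most recent episode returns, and tasks are sampled in proportion to that value. The class name `ALPCurriculum` and the `explore` parameter are hypothetical.

```python
import random


class ALPCurriculum:
    """Toy curriculum that samples tasks in proportion to absolute learning progress."""

    def __init__(self, n_tasks, explore=0.2, seed=0):
        self.last_return = [None] * n_tasks  # most recent return seen per task
        self.alp = [0.0] * n_tasks           # current ALP estimate per task
        self.explore = explore               # probability of a uniformly random task
        self.rng = random.Random(seed)

    def update(self, task, episode_return):
        """Record a new return; ALP is the absolute change since the last visit."""
        previous = self.last_return[task]
        if previous is not None:
            self.alp[task] = abs(episode_return - previous)
        self.last_return[task] = episode_return

    def sample_task(self):
        """Pick the next task, favoring tasks whose returns are still changing."""
        n_tasks = len(self.alp)
        if sum(self.alp) == 0.0 or self.rng.random() < self.explore:
            return self.rng.randrange(n_tasks)
        return self.rng.choices(range(n_tasks), weights=self.alp)[0]


curriculum = ALPCurriculum(n_tasks=3, explore=0.0)
curriculum.update(0, 0.0)
curriculum.update(0, 0.5)  # task 0 is still improving
curriculum.update(1, 1.0)
curriculum.update(1, 1.0)  # task 1 has plateaued
print(curriculum.alp, curriculum.sample_task())
```

A task whose return has stopped changing receives zero weight here, so training effort shifts towards tasks where performance is still moving in either direction.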

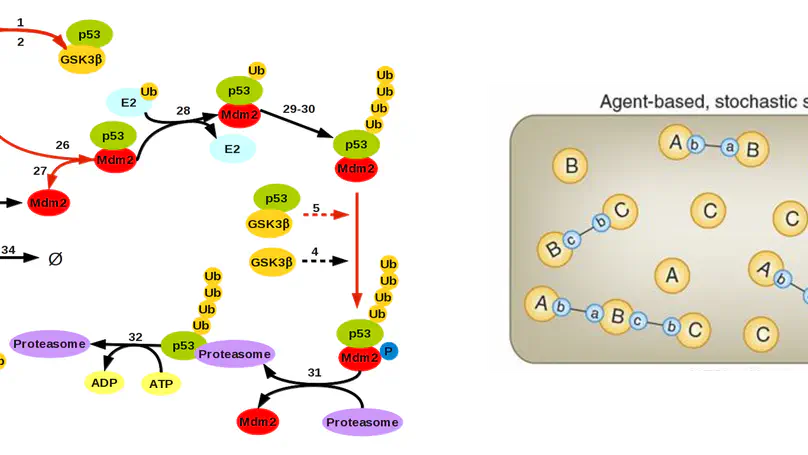

Modern biochemistry opens new perspectives in understanding and finding remedies for diseases like cancer, diabetes or Alzheimer’s, where regulatory mechanisms of cells in an organism’s metabolism fail. This is made possible by broad and highly specialized knowledge of biochemical contexts, obtained through computer-based simulations of diverse cell and enzyme interactions.

This work focuses on the simulation of such interactions via the rule-based method. Here, the behavior of complex biochemical processes in a system is split into several recurring patterns, which can then be completely modeled and simulated using pattern matching tools and the corresponding model transformations.

Existing, well-established specifications such as Kappa or the BioNetGen Language provide extensive possibilities to model such systems and simulations using domain-specific languages. Still, they have issues in terms of intuitive comprehensibility and general usability. With regard to these criteria, a specification for such rule-based simulations and a corresponding framework for integrating it into the existing simulation tool SimSG are developed and implemented. Finally, this new language is evaluated with respect to the intended improvement of these aspects, and the pattern matching tools used for simulation are compared based on different models of various types.

Scholarships

Scholarship awarded for my Master’s thesis “Approximate Bayesian Inference of Parametric Cost Functions in Continuous Decision-Making” by the research cluster “The Adaptive Mind”, funded by the Hessian Ministry of Higher Education, Research, Science and the Arts. The Adaptive Mind focuses on research into the mechanisms behind human cognition that allow us to quickly adapt to our uncertain and rapidly changing world.

Experience

Responsibilities include:

- Conducting Experiments

- Analysing Data

- Modelling of Human Behavior

All of these concern human perception, especially the domain of active vision.

Teaching groups of students in the subjects:

- Logic Design

- Mathematics (Statistics and Numerical Methods)

Also creating the exercises for these subjects and grading exams.