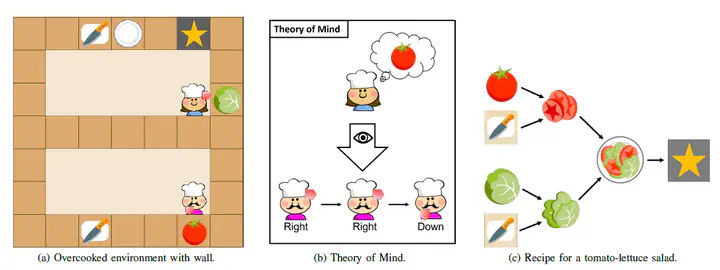

Theory of Mind Models for Human Robot Interaction under Partial Observability

Abstract

Humans cooperate with each other every day using some form of Theory of Mind (ToM). The term describes a human’s ability to infer the mental state of another person, e.g. that person’s beliefs or goals, which in turn enables more efficient interaction and cooperation. Robots have no inherent equivalent, so implementing a ToM for robotic agents could enable much more natural Human Robot Interaction. In this paper, we explore existing ToM approaches for inferring the mental states of a human interaction partner. In addition, we implement two different approaches to estimate the goal of another agent from past environment states. The estimated goal is then used as input to a Reinforcement Learning module that learns our agent’s optimal behavior. The proposed methods are evaluated in the Overcooked environment. We show that we can successfully infer the goal of the other agent and use this goal to learn a policy. At the same time, our results show that ToM models are not always as useful or necessary as they seem. The environmental conditions and evaluation metrics cannot be chosen as straightforwardly as one might think and require more thought and research.